A recent blog post highlighted a Microsoft technical report which asserts that most Hadoop workloads are 100 GB or smaller, and for almost all workloads except the very largest “a single ‘scale-up’ server can process each of these jobs and do as well or better than a cluster in terms of performance, cost, power, and server density.” It’s certainly true that Hadoop MapReduce seems to have focused more on clustering issues than on single-server optimizations. But — to paraphrase Mark Twain — reports of scale-out’s demise for all but the largest workloads are greatly exaggerated.

Scale-Up Versus Scale-Out

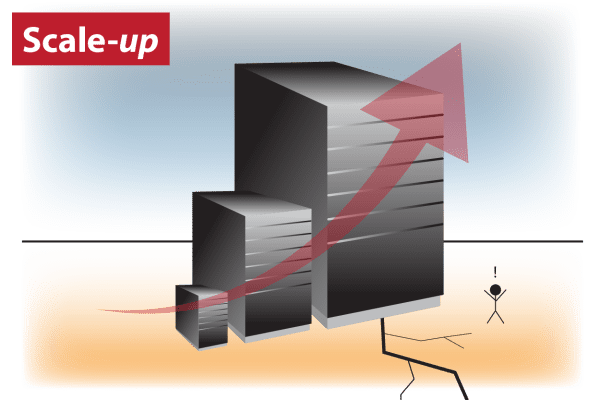

Scaling up is the process of migrating to a more powerful single server to process a workload faster or to handle a growing workload that still fits within one server. Usually this means moving to a server with more CPU cores, greater memory capacity, and higher-end networking and storage options.

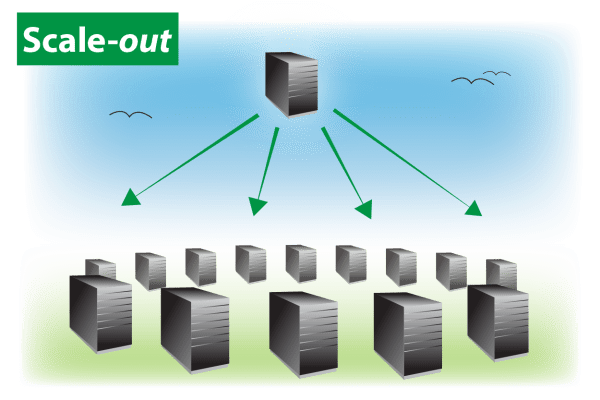

At some point, scaling up becomes costly, and workloads grow beyond what even a high-end server can handle. To overcome this limitation, scaling out distributes a workload across a cluster of servers working together. Now CPU, memory, and storage resources can grow without predefined limits. Unless network bandwidth also grows in proportion to the number of servers, the network usually becomes the limiting factor in scaling. (Supercomputing systems use scalable networks such as torus interconnects; commodity clusters typically use Ethernet switches with fixed bandwidth.)

There are several reasons why scaling out will continue to serve an important role, and to be sure, it’s more than the size of the workload that matters. First, scaling out enables computing capacity to be deployed incrementally and economically as the size of the workload increases, using clusters of relatively small servers (instead of investing in a single, expensive multicore server to handle the highest anticipated workload). Second, scale-out’s ability to provide high availability will always be important to mission-critical applications, even if the problem size fits within one server. Third, technology changes so fast that today’s pricey, top-of-the-line server will quickly become tomorrow’s dusty objet d’art as it awaits recycling. (Cloud computing just changes that to a per-hour calculation: top-of-the-line servers may not be cost-effective.)

Moreover, in our experience, many analytics applications host data sets much larger than the 100 GB size Microsoft cites as a typical upper bound, and new applications drive this trend by taking advantage of newly available memory. (We often host data sets in the terabytes in our in-memory data grid.) Keeping these data sets in memory requires a cluster. For example, a recent data set used in an e-commerce analytics application held 40 million objects totaling about 2 TB of data including replicas. This data set could not be stored in one server on Amazon EC2, even using their largest instance type; it required a cluster of servers to hold the entire data set in memory. Also, it’s often not advisable to store such large data sets in the smallest possible cluster of servers; there are benefits to using a larger cluster of small servers (see below).

That said, it’s clear that scale-out infrastructures, including middleware execution platforms like Hadoop, need to evolve to make full use of the large memory capacity and many cores available within each server. As Hadoop has gained popularity over the last few years, software architects have focused on efficiently scaling out with minimum overhead, and the Microsoft paper reminds us to rethink our scale-up algorithms and extract maximum value out of new technology as it enters the mainstream. For example, fully using available cores with multi-threading and minimizing latency for inter-process data transfers with memory-mapped files can squeeze more performance out of modern servers.

Finding the Right Balance

Once we accept that scale-out is an integral element of mission-critical deployments, it’s finding the right balance between memory, CPU, and network bandwidth that matters in driving overall performance. One or more of these resources tends to lag in performance at any point as technology evolves, and software design has to compensate for that. For example, right now network bandwidth in commodity networks tends to be the laggard, making it costly (in time) to ship the hundreds of gigabytes a server can now hold. (For example, a 10 Gbps network requires 80 seconds at maximum bandwidth to send 100 gigabytes between servers; scatter/gather of many objects greatly increases that time.) As the amount of data stored in each server grows, load-balancing between servers takes longer, and the delays eventually can impact availability. Scaling out can help by distributing the data set across more servers, thereby reducing the amount of data each server holds and the network delays in rebalancing workloads after a server is added or removed.
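To make the arithmetic concrete, here is a minimal sketch of the best-case transfer calculation. The function names are hypothetical, and the model is deliberately simple: it assumes decimal units (1 GB = 8 gigabits), a single link running at full line rate, data spread evenly across servers, and no protocol or scatter/gather overhead. The 2,000 GB total borrows the roughly 2 TB figure mentioned earlier purely as an illustration.

```python
def transfer_seconds(data_gb: float, link_gbps: float) -> float:
    """Best-case seconds to send data_gb gigabytes over a link_gbps link.

    Assumes decimal units and full line rate with no overhead.
    """
    return data_gb * 8 / link_gbps


def share_per_server_gb(total_gb: float, servers: int) -> float:
    """Gigabytes each server holds if the data set is spread evenly."""
    return total_gb / servers


if __name__ == "__main__":
    # The example from the text: 100 GB over 10 Gbps.
    print(transfer_seconds(100, 10))  # 80.0

    # Illustrative only: time to move one server's share of a 2,000 GB
    # data set over a single 10 Gbps link, for several cluster sizes.
    for servers in (4, 8, 16):
        share = share_per_server_gb(2000, servers)
        print(servers, share, transfer_seconds(share, 10))
```

Under these assumptions, doubling the number of servers halves each server's share and therefore halves the best-case time to move that share. Real rebalancing moves data over many links at once and pays per-object overhead, so treat these numbers as a lower bound on a simplified model rather than a prediction.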

In sum, it’s not “either-or” — scale-out is here to stay. However, it’s absolutely true that maintaining support for the latest memory and processor technology is crucial to reaping the benefits of scale-up, integrating it with scale-out, and thereby maximizing performance, availability, and cost-effectiveness.