Companies with large numbers of geographically distributed assets increasingly need intelligent real-time monitoring to keep operations running smoothly. Consider a retail chain of stores or restaurants with thousands of outlets. (Walgreens has more than 9,000, and McDonald’s has more than 14,000 in the U.S. alone.) Each store has mission-critical equipment, such as refrigerators and automatic doors, which must operate properly at all times, and each also faces dynamic staffing and inventory challenges. To keep operations efficient and cost-effective, it’s important to respond quickly to issues as they occur and to verify their resolution efficiently. In addition, real-time information on sales and inventory gives managers new tools that help optimize overall performance and maximize profits.

Traditional platforms for streaming analytics don’t offer the combination of granular data tracking and real-time aggregate analysis that logistics applications in operational environments such as these require. It’s not enough just to pick out interesting events from an aggregated data stream and then send them to a database for offline analysis using Spark. What’s needed is the ability to easily track incoming telemetry from each individual store so that issues can be quickly analyzed, prioritized, and handled. At the same time, it’s important to be able to combine data and generate analytics results for all stores in real time so that strategic decisions, such as running flash sales or replenishing hot-selling inventory, can be made without unnecessary delay.

The answer to these challenges is a new software concept called the “real-time digital twin.” This breakthrough approach for streaming analytics lets application developers separately track and analyze incoming telemetry from each individual store while the platform handles the task of filtering out telemetry associated with other stores. This dramatically simplifies application code, because the code only has to reason about a single store, and it scales automatically, because the execution platform runs the same code simultaneously for all stores. In addition, the platform provides fast, in-memory data storage so that the application can easily and quickly record both telemetry and analytics results for each store. Lastly, built-in aggregate analysis tools make it easy to immediately roll up these analytics results across all stores and continuously keep track of the big picture.
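To make the model concrete, here is a minimal Python sketch of the idea, not the actual ScaleOut API. The names `StoreTwin`, `TwinPlatform`, `process_message`, the message fields, and the temperature threshold are all hypothetical, chosen only for illustration. The point it shows is that the per-store code sees only its own store's telemetry and state, while a stand-in "platform" does the routing.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Assumed threshold, for illustration only.
MAX_FRIDGE_TEMP_C = 5.0


@dataclass
class StoreTwin:
    """Hypothetical per-store state; one instance exists for each store."""
    store_id: str
    fridge_temp_c: float = 0.0
    open_alerts: List[str] = field(default_factory=list)

    def process_message(self, msg: dict) -> None:
        """Handle one telemetry message already known to belong to this store."""
        if msg["type"] == "fridge_temp":
            self.fridge_temp_c = msg["value"]
            too_warm = msg["value"] > MAX_FRIDGE_TEMP_C
            if too_warm and "fridge_warm" not in self.open_alerts:
                self.open_alerts.append("fridge_warm")
            elif not too_warm and "fridge_warm" in self.open_alerts:
                # A later in-range reading verifies that the issue is resolved.
                self.open_alerts.remove("fridge_warm")


class TwinPlatform:
    """Stand-in for the hosting platform: routes each message to its store's twin."""

    def __init__(self) -> None:
        self.twins: Dict[str, StoreTwin] = {}

    def deliver(self, store_id: str, msg: dict) -> None:
        # Create the twin on first contact, then hand it only its own telemetry.
        twin = self.twins.setdefault(store_id, StoreTwin(store_id))
        twin.process_message(msg)

    def stores_with_alerts(self) -> List[str]:
        """A simple roll-up across all twins: which stores need attention now?"""
        return sorted(t.store_id for t in self.twins.values() if t.open_alerts)
```

In this sketch the application logic lives entirely in `process_message`; nothing in it filters by store, which is the simplification the model is meant to deliver. A real platform would also distribute the twins across servers and persist their state in memory, which this single-process example does not attempt.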

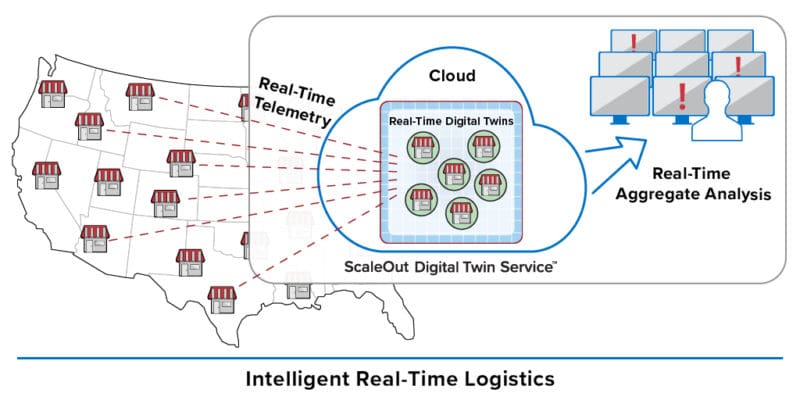

The ScaleOut Digital Twin Streaming Service™, which runs in the Microsoft Azure cloud, hosts real-time digital twins for applications like these that need to track thousands of data sources. It can receive telemetry from retail stores over the Internet using event delivery systems such as Azure IoT Event Hub, AWS, Kafka, and REST, and it can respond to the retail stores in milliseconds. In addition, the cloud service runs aggregate analytics continuously, producing fresh results every few seconds and avoiding the need to wait for offline results from a data lake.
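As a rough illustration of what such an aggregate pass might compute, the following Python sketch rolls up per-store sales counts into chain-wide totals and picks out the hot sellers. It is an assumption-laden example rather than the service's built-in tooling: the function names `roll_up_sales` and `hot_sellers` and the `units_sold` field are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def roll_up_sales(store_states: Iterable[Dict[str, Dict[str, int]]]) -> Counter:
    """Sum each store's hypothetical 'units_sold' counts into chain-wide totals."""
    totals: Counter = Counter()
    for state in store_states:
        totals.update(state["units_sold"])
    return totals


def hot_sellers(totals: Counter, n: int) -> List[Tuple[str, int]]:
    """Return the n best-selling products across all stores."""
    return totals.most_common(n)
```

Run every few seconds over the current twin states, a pass like this would give managers an up-to-date view of which products to replenish or promote.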

The following diagram illustrates a nationwide chain of retail outlets streaming telemetry to their counterpart real-time digital twins running in the cloud service. It also shows real-time aggregate results being fed to displays for immediate consumption by operations managers.

With this ground-breaking technology, operations managers can now easily manage logistics for thousands of retail outlets, immediately identify and respond to issues, and optimize operations in real time. By harnessing the power and simplicity of the real-time digital twin model, application developers can quickly implement and customize streaming analytics to meet these mission-critical requirements. With the real-time digital twin model, the next generation of streaming analytics has arrived.
