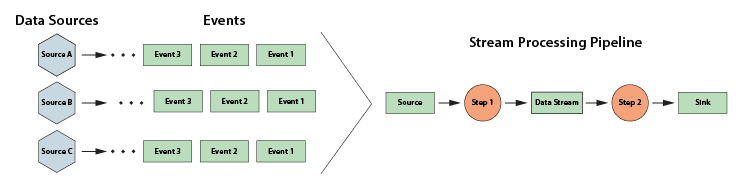

Designing applications that extract real-time insights from streaming telemetry can be a daunting challenge. Event streams typically combine messages from many data sources, as shown below, and selecting and analyzing the messages that reveal patterns of interest is difficult. For these reasons, most streaming applications perform only rudimentary analysis (often in the form of queries) on the incoming data stream and push most event messages into a data lake for offline examination.

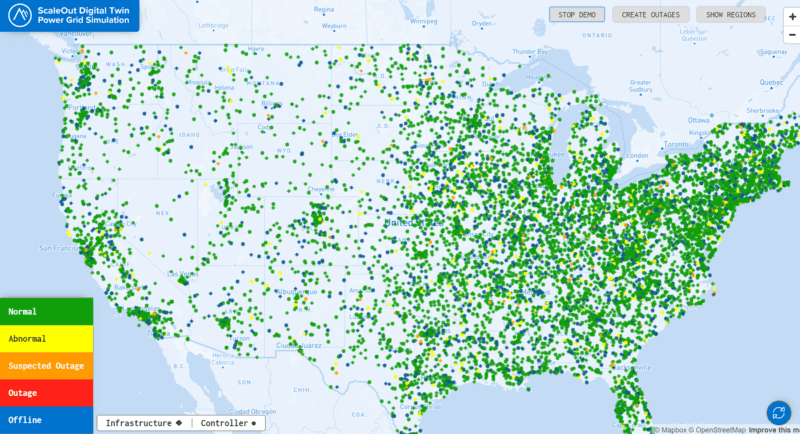

The problem with this approach is that important insights requiring quick action are not immediately available. In applications that require real-time responses, situational awareness suffers, and decision making must wait for human analysts to wade through the telemetry. For example, consider a large power grid with tens or hundreds of thousands of nodes, such as power poles, controllers, and other critical elements. When outages occur (possibly due to cyber-attacks), they can originate simultaneously in numerous locations and spread quickly. It’s critical to prioritize the telemetry from each data source and determine the scope of the outages within a few seconds. Doing so maximizes situational awareness and enables a quick, strategic response.

The following diagram of a simulated power grid with 20,000 nodes gives an idea of how many data sources must be tracked and analyzed simultaneously:

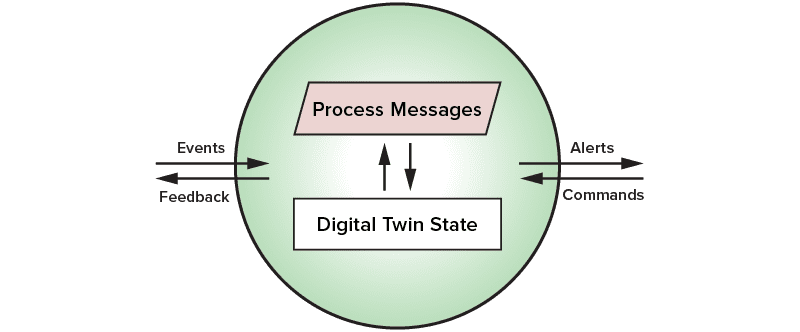

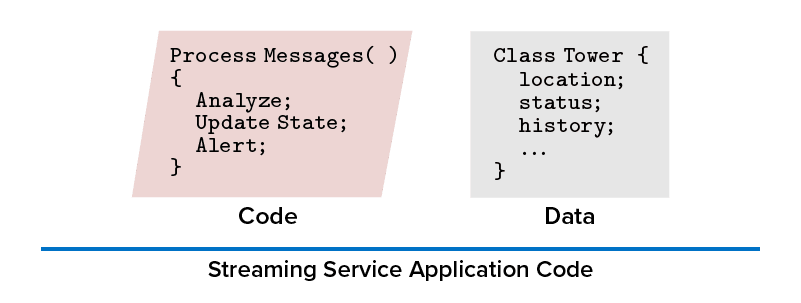

The solution to this challenge is to refactor the problem so that application code can ignore the overall complexity of the event stream and focus on using domain-specific expertise to analyze telemetry from a single data source. The concept of “real-time digital twins” makes this possible. Borrowed from its usage in product life-cycle management and simulation, a digital twin provides an object-oriented container for hosting application code and data. In its usage in streaming analytics, a real-time digital twin hosts an application-defined method for analyzing event messages from a single data source combined with an associated data object:

The data object holds dynamic, contextual information about a single data source and the evolving results derived from analyzing incoming telemetry. Properties in the data objects for all data sources can be fed to real-time aggregate analysis (performed by the stream-processing platform) to immediately spot patterns of interest in the analytic results generated for each data source.
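As a rough illustration of the aggregate step, the following Python sketch (not ScaleOut’s API) assumes each twin’s data object exposes hypothetical `region` and `alert_level` properties. It counts alerted twins per region and ranks the most severe nodes. A stream-processing platform would presumably run such queries continuously and in parallel across all twins; this sketch simply scans an in-memory list.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class NodeState:
    """Hypothetical data object held by one power-grid node's twin."""
    node_id: str
    region: str
    alert_level: int = 0  # assumed scale: 0 = normal, higher = more severe


def alerts_by_region(states, min_level=1):
    """Aggregate analysis: count twins per region at or above min_level."""
    return Counter(s.region for s in states if s.alert_level >= min_level)


def top_alerts(states, n=3):
    """Return up to n alerted nodes, most severe first."""
    alerted = [s for s in states if s.alert_level > 0]
    return sorted(alerted, key=lambda s: s.alert_level, reverse=True)[:n]
```

Because each twin has already condensed its own telemetry into a few properties, the aggregate query only has to read those properties, not the raw event stream.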

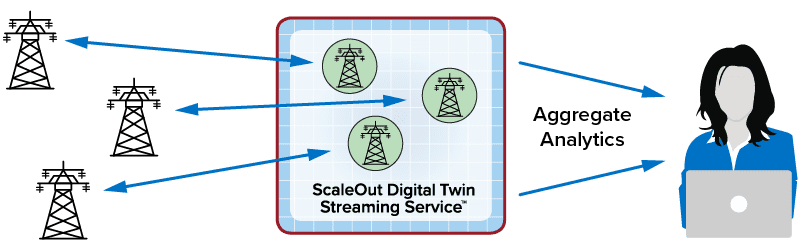

Here is an illustration of real-time digital twins processing telemetry from towers in a power grid from the above example application:

Real-time digital twins dramatically simplify application code in two ways. First, they automatically correlate incoming event messages so that the application sees only the messages from a single data source. Second, they make contextual data about that data source immediately available to the application. This moves a significant amount of application plumbing (and a source of performance bottlenecks) out of the application and into the execution platform. The platform, in turn, can scale performance by running thousands of real-time digital twins in parallel.
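The correlation that the platform performs can be sketched in a few lines. In this hypothetical, single-threaded Python stand-in (the `Dispatcher`, `Message`, and `TwinState` names and the `source_id` field are all assumptions), incoming messages are keyed by data source, a twin’s data object is created on first contact, and the application-supplied method receives only that source’s messages together with its persistent state. A real platform would distribute and parallelize this routing; the sketch only shows the idea.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Message:
    source_id: str  # identifies the data source (assumed field name)
    payload: dict


@dataclass
class TwinState:
    """Per-source data object: context plus accumulated results."""
    source_id: str
    message_count: int = 0
    history: List[dict] = field(default_factory=list)


# The application supplies only this: a method that sees one source's messages.
ProcessFn = Callable[[TwinState, Message], None]


class Dispatcher:
    """Hypothetical platform stand-in that correlates messages by source."""

    def __init__(self, process: ProcessFn):
        self._process = process
        self._twins: Dict[str, TwinState] = {}

    def ingest(self, msg: Message) -> None:
        # Create the twin's data object on first contact, then route to it.
        state = self._twins.get(msg.source_id)
        if state is None:
            state = self._twins[msg.source_id] = TwinState(msg.source_id)
        self._process(state, msg)

    def twins(self) -> List[TwinState]:
        return list(self._twins.values())


def count_and_record(state: TwinState, msg: Message) -> None:
    """Example application method: it never sees other sources' messages."""
    state.message_count += 1
    state.history.append(msg.payload)
```

The application code (`count_and_record`) contains no lookup or routing logic at all; that plumbing lives entirely in the dispatcher.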

As a result, the application developer needs to write only a single method, one that embeds domain-specific logic for interpreting telemetry from a given data source in the context of dynamic information about that specific data source. This method can then signal alerts, send control messages back to the data source, or both. Importantly, it can also record the results of its analysis in its data object, making those results available to real-time aggregate analytics.

In the power grid example above, the application requires only a few lines of code to embed a set of rules for interpreting state changes from a node in the power grid. These state changes indicate whether a cyber-attack or outage is suspected or whether another abnormal condition exists. The rules decide whether this node’s location, role, and history of state changes, which might include numerous false alarms, justify raising an alert status that is fed to aggregate analysis. The code runs on every incoming message and updates the alert status along with other useful information, such as the frequency of false alarms.
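A rule set of the kind described here might look like the following Python sketch. The source does not give the actual rules, so the state names (`"normal"`, `"outage"`, `"intrusion_suspected"`, `"false_alarm"`), the `role` and `near_substation` fields, the thresholds, and the `update_alert` function are all hypothetical. The function runs once per incoming message, uses the node’s role, location, and false-alarm history, and writes an alert status into the data object for aggregate analysis.

```python
from dataclasses import dataclass


@dataclass
class GridNode:
    """Hypothetical data object for one power-grid node's twin."""
    node_id: str
    role: str               # e.g. "controller" or "pole" (assumed values)
    near_substation: bool   # stand-in for location context
    reports: int = 0        # abnormal-state reports received
    false_alarms: int = 0   # reports that were later retracted
    alert_status: int = 0   # fed to aggregate analysis: 0 none, 1 watch, 2 high

    @property
    def false_alarm_rate(self) -> float:
        return self.false_alarms / self.reports if self.reports else 0.0


def update_alert(node: GridNode, state: str) -> int:
    """Runs on every incoming message; returns the node's new alert status."""
    if state == "false_alarm":          # an earlier report was retracted
        node.false_alarms += 1
        node.alert_status = 0
    elif state == "normal":
        node.alert_status = 0
    else:                               # "outage" or "intrusion_suspected"
        node.reports += 1
        critical = node.role == "controller" or node.near_substation
        noisy = node.reports >= 5 and node.false_alarm_rate > 0.5
        if state == "intrusion_suspected" or (critical and not noisy):
            node.alert_status = 2       # high priority
        elif not noisy:
            node.alert_status = 1       # worth watching
        # A noisy, non-critical node keeps its current status.
    return node.alert_status
```

Tracking the false-alarm rate in the data object is what lets the rules discount a chronically noisy pole while still escalating a critical controller immediately.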

The real-time digital twin effectively filters incoming telemetry using domain-specific knowledge to generate real-time results, and aggregate analysis, also performed in real time, then identifies the nodes that need immediate attention. All of this takes a surprisingly small amount of code, as illustrated below:

This is one of many possible applications for real-time digital twins. Others include fleet and traffic management, healthcare, financial services, IoT, and e-commerce recommendations. ScaleOut Software’s recently announced cloud service provides a powerful, scalable platform for hosting real-time digital twins and performing aggregate analytics with an easy-to-use UI. We invite you to check it out.